[This essay is part of The Tech Progressive Writing Challenge Cohort 2. Join the conversation in the build_ Discord.]

Wikipedia turned 20 earlier this year. No one initially believed it would work. Today, with 300,000 active contributors and 58 million articles written in 325 languages, Wikipedia is the 7th most visited website in the world, and it’s a reliable source on many topics.

But there’s a significant disparity between languages: 6 million articles in English, 1 million in Modern Standard Arabic, 50,000 in Kurdish, and only 360 articles in Fulfulde, a language spoken by more than 40 million people across 20 countries in West and Central Africa.

Wikipedia was founded on Hayek’s view that knowledge is decentralized: each individual knows only a small fraction of what is known collectively. As a result, decisions are best made by those with local knowledge rather than by a central authority.

However, half of the world’s population doesn’t speak a language with significant coverage on Wikipedia (more than 1 million articles). Information cannot flow to these people, and they cannot contribute to the world’s knowledge. Conversely, each edition holds knowledge that the others lack: for instance, 20,000 articles on the Kurdish Wikipedia do not exist on the Arabic one. There are 20 million distinct topics across all language editions of Wikipedia, but “only” 6 million are covered in English. The knowledge gap is enormous.
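Wikidata records which language editions have an article about each item (its “sitelinks”). This makes the gap concrete: finding topics covered in one edition but not another is a set difference.

The Python sketch below is a toy with made-up items. The site codes follow Wikidata’s naming (`arwiki` for Arabic, `ckbwiki` for Central Kurdish). The helper names are hypothetical.

```python
# Toy stand-in for Wikidata sitelinks: each item maps to the set of
# Wikipedia editions (site codes) that have an article about it.
# The item IDs and sitelinks below are hypothetical.
SITELINKS: dict[str, set[str]] = {
    "Q_a": {"enwiki", "arwiki", "ckbwiki"},
    "Q_b": {"ckbwiki"},
    "Q_c": {"enwiki", "ckbwiki"},
    "Q_d": {"arwiki"},
    "Q_e": {"enwiki"},
}


def missing_in(target: str, source: str, sitelinks: dict[str, set[str]]) -> set[str]:
    """Items that have an article on `source` but none on `target`."""
    return {
        item
        for item, sites in sitelinks.items()
        if source in sites and target not in sites
    }


def coverage(site: str, sitelinks: dict[str, set[str]]) -> float:
    """Fraction of all distinct topics that have an article on `site`."""
    covered = sum(1 for sites in sitelinks.values() if site in sites)
    return covered / len(sitelinks)


print(sorted(missing_in("arwiki", "ckbwiki", SITELINKS)))  # ['Q_b', 'Q_c']
print(coverage("enwiki", SITELINKS))  # 0.6
```

Applied to the figures above, English covers roughly 6 million of 20 million topics, i.e. about 30%.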

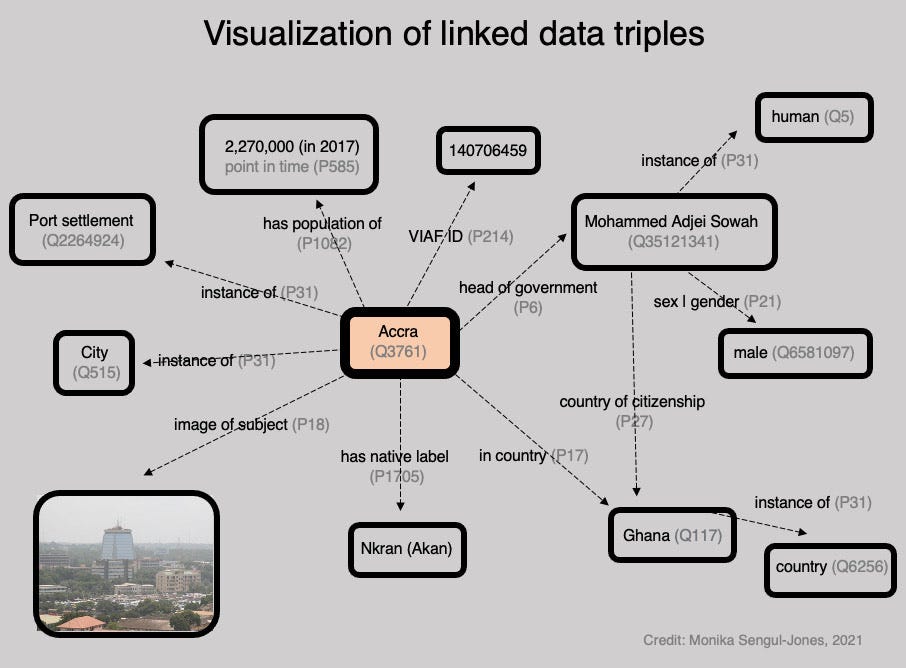

Wikidata was launched in 2012 to solve this problem. It is a structured database linked to Wikipedia. For example, Napoleon’s entry on Wikidata includes all basic information about him: name, place of birth, date of birth, etc. Because the data is structured, it is also easy to query. Assistants such as Siri and Alexa use Wikidata’s structured dataset to answer questions: you can ask Wikidata for a list of the largest cities with a female mayor, or of the living former US presidents from oldest to youngest. Using Wikidata, we can also fill in “infoboxes” on Wikipedia (e.g., here on a French article about cheese, of course!). And because each Wikidata entry carries labels in many languages, the same facts can be reused to generate content in any language edition of Wikipedia.
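To illustrate querying structured data, here is a hedged sketch of the “largest cities with a female mayor” question. It is written in SPARQL for the Wikidata Query Service and wrapped in Python. It is modelled on the kind of example query the service offers, not copied from it.

A few things are assumptions rather than confirmed details:
- The endpoint is assumed to honour a `format=json` parameter.
- `request_url`, `rows` and the sample response are hypothetical helpers and data.

```python
from urllib.parse import urlencode

# Wikidata identifiers used below: P31 = instance of, P279 = subclass of,
# Q515 = city, P1082 = population, P6 = head of government,
# P21 = sex or gender, Q6581072 = female, P582 = end time.
QUERY = """
SELECT DISTINCT ?city ?cityLabel ?population ?mayorLabel WHERE {
  ?city wdt:P31/wdt:P279* wd:Q515 ;
        wdt:P1082 ?population ;
        p:P6 ?statement .
  ?statement ps:P6 ?mayor .
  ?mayor wdt:P21 wd:Q6581072 .
  # Skip mayors whose term has an end date.
  FILTER NOT EXISTS { ?statement pq:P582 ?end . }
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }
}
ORDER BY DESC(?population)
LIMIT 10
"""

ENDPOINT = "https://query.wikidata.org/sparql"


def request_url(query: str) -> str:
    """GET URL for the query service, asking for JSON results."""
    return ENDPOINT + "?" + urlencode({"query": query, "format": "json"})


def rows(response: dict) -> list[dict[str, str]]:
    """Flatten SPARQL 1.1 JSON results into one plain dict per row."""
    return [
        {var: cell["value"] for var, cell in binding.items()}
        for binding in response["results"]["bindings"]
    ]


# Made-up response in the standard SPARQL JSON results shape.
sample = {
    "head": {"vars": ["cityLabel", "population"]},
    "results": {"bindings": [
        {"cityLabel": {"type": "literal", "value": "Example City"},
         "population": {"type": "literal", "value": "1000000"}},
    ]},
}
print(rows(sample))  # [{'cityLabel': 'Example City', 'population': '1000000'}]
```

To run it for real, you would:
1. Fetch `request_url(QUERY)` with `urllib.request.urlopen`. Wikimedia asks clients to send a descriptive `User-Agent` header.
2. Decode the JSON.
3. Pass it to `rows`.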

But that’s not enough. These methods can only generate simple articles, and many “small” languages like Fulfulde aren’t covered by machine translation systems such as Google Translate. Beyond reading, we want everyone to contribute. How can a Fulfulde speaker document local politics in their hometown? Here comes Abstract Wikipedia! Its goals:

- Allowing more people to read more content in the language they choose.
- Allowing more people to contribute content for more readers, thus increasing the reach of underrepresented contributors.

In Abstract Wikipedia, articles are written in a language-independent format, using items from Wikidata and semantic functions from Wikifunctions. Wikifunctions is an open repository of code that anyone can edit. It’s the Wiki version of GitHub. Wikifunctions will, in particular, host “renderers”: functions that turn the language-independent “Abstract content” into natural language, using the linguistic knowledge available in Wikidata. So Abstract Wikipedia = Wikidata + Wikifunctions.
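Here is a toy Python model of the split described above:
- `Item` stands in for a Wikidata item (labels plus a grammatical gender).
- `Instantiation` is one piece of language-independent content.
- Each (constructor, language) pair gets its own renderer.

All class and function names are hypothetical, not Wikifunctions’ actual data model. Q90 (Paris) and Q515 (city) are real Wikidata identifiers.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """Toy stand-in for a Wikidata item: per-language labels plus the
    grammatical gender a renderer may need (hypothetical structure)."""
    qid: str
    labels: dict[str, str]
    genders: dict[str, str] = field(default_factory=dict)


@dataclass
class Instantiation:
    """Language-independent content meaning "`instance` is a `cls`"."""
    instance: Item
    cls: Item


# One renderer per (constructor, language) pair.
RENDERERS = {}


def renderer(constructor, lang):
    """Decorator that registers a function as the renderer for a pair."""
    def register(fn):
        RENDERERS[(constructor, lang)] = fn
        return fn
    return register


@renderer(Instantiation, "en")
def instantiation_en(c):
    # Naive: always uses "a" (no "an" before vowel sounds).
    return f"{c.instance.labels['en']} is a {c.cls.labels['en']}."


@renderer(Instantiation, "fr")
def instantiation_fr(c):
    # French needs the noun's gender to pick the indefinite article.
    article = "une" if c.cls.genders.get("fr") == "f" else "un"
    return f"{c.instance.labels['fr']} est {article} {c.cls.labels['fr']}."


def render(content, lang):
    """Dispatch abstract content to the renderer for its type and language."""
    return RENDERERS[(type(content), lang)](content)


PARIS = Item("Q90", {"en": "Paris", "fr": "Paris"})
CITY = Item("Q515", {"en": "city", "fr": "ville"}, {"fr": "f"})
fact = Instantiation(PARIS, CITY)

print(render(fact, "en"))  # Paris is a city.
print(render(fact, "fr"))  # Paris est une ville.
```

The French renderer shows why renderers need the linguistic knowledge stored in Wikidata. For the same abstract content, French needs the noun’s gender to choose “une” over “un”.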

This English sentence: “San Francisco is the cultural, commercial, and financial center of Northern California. It is the fourth-most populous city in California, after Los Angeles, San Diego and San Jose.” would be stored on Abstract Wikipedia as:

Items written as “Q” followed by a number are Wikidata entries (e.g., Q65 is Los Angeles). “Instantiation()” and “Ranking()” are two renderers. In English, Instantiation() generates sentences of the form “x is y.”, such as “Paris is a city” or “Wikipedia is a website.” We only need to write the Instantiation() renderer once per language, and we can then use it to generate thousands of different sentences in that language. That would make it far easier to cover not just 325 languages but all 7,139 languages spoken worldwide.
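Here is a rough sketch of the second sentence as abstract content. It comes with an English `Ranking` renderer that can be reused for other subjects.

The fields of `Ranking` are our own guess at a reasonable shape, not Abstract Wikipedia’s actual constructor. The Q and P identifiers are Wikidata’s:

| Identifier | Meaning |
| --- | --- |
| Q62 | San Francisco |
| Q99 | California |
| Q515 | city |
| Q16552 | San Diego |
| Q16553 | San Jose |
| P1082 | population |

```python
from dataclasses import dataclass, field

# English labels for the Wikidata items used below.
LABELS_EN = {
    "Q62": "San Francisco",
    "Q65": "Los Angeles",
    "Q16552": "San Diego",
    "Q16553": "San Jose",
    "Q99": "California",
    "Q515": "city",
}
# English adjective for ranking by a given property (P1082 = population).
ADJECTIVES_EN = {"P1082": "populous"}
ORDINAL_WORDS_EN = {2: "second", 3: "third", 4: "fourth", 5: "fifth"}


@dataclass
class Ranking:
    """Hypothetical abstract content: `subject` is the `rank`-th `category`
    in `scope` by property `by`, after the higher-ranked items in `after`."""
    subject: str
    rank: int
    category: str
    by: str
    scope: str
    after: list[str] = field(default_factory=list)


def ordinal_en(n: int) -> str:
    """English ordinal: words for 2-5, numeric suffix otherwise."""
    if n in ORDINAL_WORDS_EN:
        return ORDINAL_WORDS_EN[n]
    if 10 <= n % 100 <= 20:
        return f"{n}th"
    suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def join_en(names: list[str]) -> str:
    # "A", "A and B", "A, B and C" (no serial comma, as in the source sentence)
    if len(names) == 1:
        return names[0]
    return ", ".join(names[:-1]) + " and " + names[-1]


def ranking_en(r: Ranking) -> str:
    """Render a Ranking as one English sentence."""
    adjective = ADJECTIVES_EN[r.by]
    degree = "most" if r.rank == 1 else f"{ordinal_en(r.rank)}-most"
    sentence = (f"{LABELS_EN[r.subject]} is the {degree} {adjective} "
                f"{LABELS_EN[r.category]} in {LABELS_EN[r.scope]}")
    if r.after:
        sentence += ", after " + join_en([LABELS_EN[q] for q in r.after])
    return sentence + "."


sf = Ranking("Q62", 4, "Q515", "P1082", "Q99", ["Q65", "Q16552", "Q16553"])
la = Ranking("Q65", 1, "Q515", "P1082", "Q99")
print(ranking_en(sf))
# San Francisco is the fourth-most populous city in California, after Los Angeles, San Diego and San Jose.
print(ranking_en(la))
# Los Angeles is the most populous city in California.
```

Once `ranking_en` exists, every ranking stored as abstract content can be rendered in English without further work. A second language needs only its own renderer and labels.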

With enough renderers, we could build entire articles. See here for a more complex example. Thanks to Wikidata, we could also update every edition of Wikipedia in one click: if the president of Slovakia changes, we only need to update the “head of state” statement in the Slovakia entry, and every renderer will display the new name. Wikifunctions is not yet live, but a temporary test instance is available here.
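Here is a minimal sketch of why one edit can update every edition. Renderers read the value from a shared store when they run, instead of each article keeping its own copy.

`Store`, `render_all` and the office holders are hypothetical placeholders. Q214 (Slovakia) and P35 (head of state) are the real Wikidata identifiers.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    """Toy stand-in for Wikidata: item ID -> property ID -> value."""
    statements: dict[str, dict[str, str]] = field(default_factory=dict)

    def put(self, item: str, prop: str, value: str) -> None:
        self.statements.setdefault(item, {})[prop] = value

    def get(self, item: str, prop: str) -> str:
        return self.statements[item][prop]


# Q214 = Slovakia, P35 = head of state. Renderers read the store each
# time they run instead of copying the value into each article.
RENDERERS = {
    "en": lambda s: f"The head of state of Slovakia is {s.get('Q214', 'P35')}.",
    "fr": lambda s: f"Le chef de l'État slovaque est {s.get('Q214', 'P35')}.",
}


def render_all(store: Store) -> dict[str, str]:
    """Render the sentence in every language from the current store."""
    return {lang: render(store) for lang, render in RENDERERS.items()}


wikidata = Store()
wikidata.put("Q214", "P35", "President A")   # placeholder name
before = render_all(wikidata)
wikidata.put("Q214", "P35", "President B")   # one edit...
after = render_all(wikidata)                  # ...reaches every language
print(after["en"])  # The head of state of Slovakia is President B.
print(after["fr"])  # Le chef de l'État slovaque est President B.
```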

If all of that sounds like “web3”, maybe that’s because Wikipedia was the first “DAO.” And we could go even further. Today, information can only be published on Wikipedia if it is backed by reliable sources, such as other encyclopedias, scientific papers, or news outlets. What if a source goes offline? Then broken links have to be rescued using the Internet Archive. If information disappears from a source (for instance, because of censorship), the previous version is hopefully available in the Internet Archive, but that is not always the case.
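Rescuing a dead link can be partly automated. The Internet Archive offers a Wayback Machine availability endpoint (`https://archive.org/wayback/available`) that returns the archived snapshot closest to a given date.

The Python sketch below builds such a request and reads the JSON reply. The helper names and the sample responses are hypothetical, and network access is left out.

```python
from typing import Optional
from urllib.parse import urlencode

AVAILABILITY_API = "https://archive.org/wayback/available"


def availability_url(url: str, timestamp: Optional[str] = None) -> str:
    """Query URL asking for the archived snapshot closest to `timestamp`
    (YYYYMMDDhhmmss, may be truncated, e.g. "20060101")."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp
    return AVAILABILITY_API + "?" + urlencode(params)


def closest_snapshot(response: dict) -> Optional[str]:
    """Archived copy's URL, or None if the archive has no snapshot."""
    closest = response.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


# Made-up responses in the API's JSON shape.
found = {"archived_snapshots": {"closest": {
    "available": True, "status": "200", "timestamp": "20060101000000",
    "url": "http://web.archive.org/web/20060101000000/http://example.com/"}}}
missing = {"archived_snapshots": {}}

print(closest_snapshot(found))
# http://web.archive.org/web/20060101000000/http://example.com/
print(closest_snapshot(missing))  # None
```

In practice, the URL would be fetched with `urllib.request.urlopen` and the decoded JSON passed to `closest_snapshot`. A `None` result is exactly the case where no archived version exists.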

On the other hand, if data goes onchain, it will be there forever and won’t change. The Associated Press started doing just that in October: it now publishes economic and sports data onchain. Could Wikidata be updated automatically with onchain data? With this “ledger of record”, as Balaji Srinivasan argues, “argument from cryptography begins superseding argument from authority.” And with Abstract Wikipedia, these sources of absolute truth would be available in all languages!
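The “won’t change” property can be illustrated with a hash chain, the data structure underneath a blockchain ledger. Each entry stores a hash that commits to its record and to the previous entry’s hash, so silently editing an old record is detectable.

This is a minimal Python sketch with hypothetical records. It is not how the Associated Press or any particular chain publishes data.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain: list[dict], record: dict) -> None:
    """Add a record whose hash commits to the previous entry's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "hash": entry_hash(prev, record)})


def verify(chain: list[dict]) -> bool:
    """Recompute every link; any edited record breaks the chain."""
    prev = GENESIS
    for entry in chain:
        if entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


ledger: list[dict] = []
append(ledger, {"dataset": "example-scores", "value": 3})  # hypothetical records
append(ledger, {"dataset": "example-scores", "value": 5})
ok_before = verify(ledger)          # True
ledger[0]["record"]["value"] = 30   # silent edit of an old record
ok_after = verify(ledger)           # False
```

A real blockchain adds replication and a consensus protocol on top of this structure. That is what makes rewriting history costly rather than merely detectable.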

Abstract Wikipedia is still in its infancy and should be integrated with Wikipedia in 2023. To learn more about the project, read this article written by its founder, Denny Vrandečić. To participate and build the future of Wikipedia, click here!